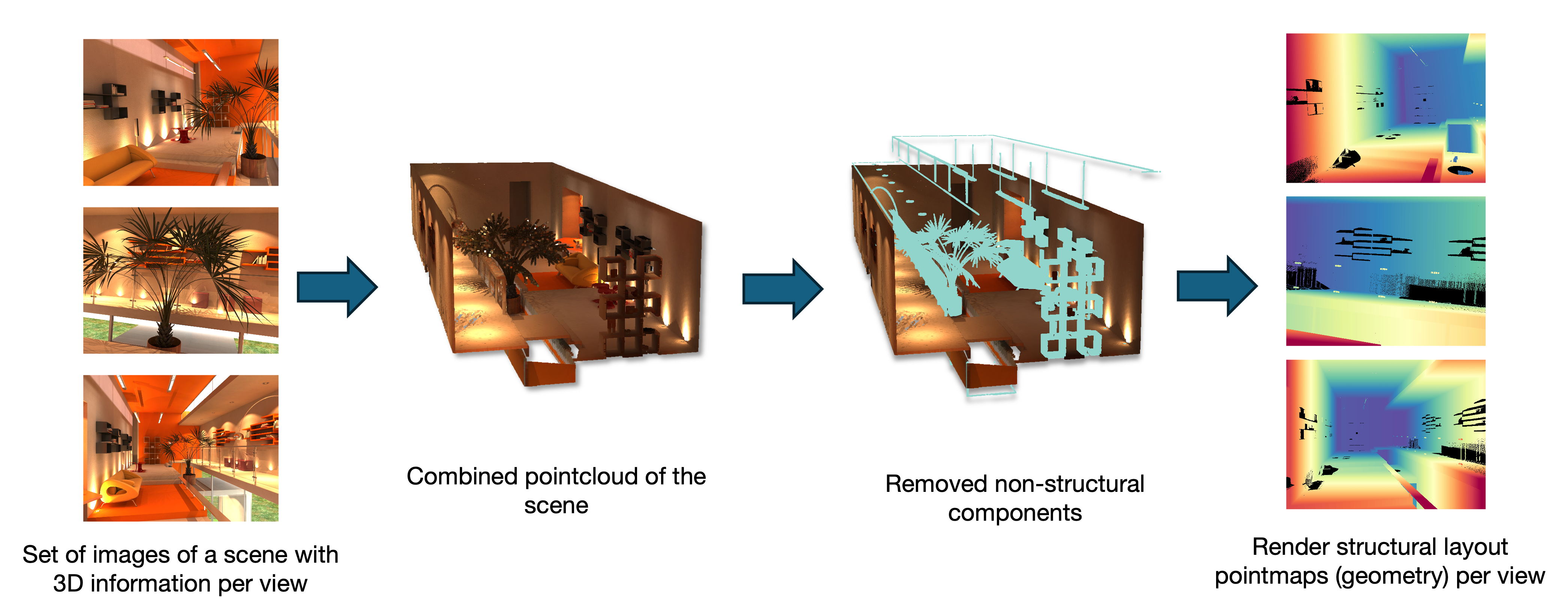

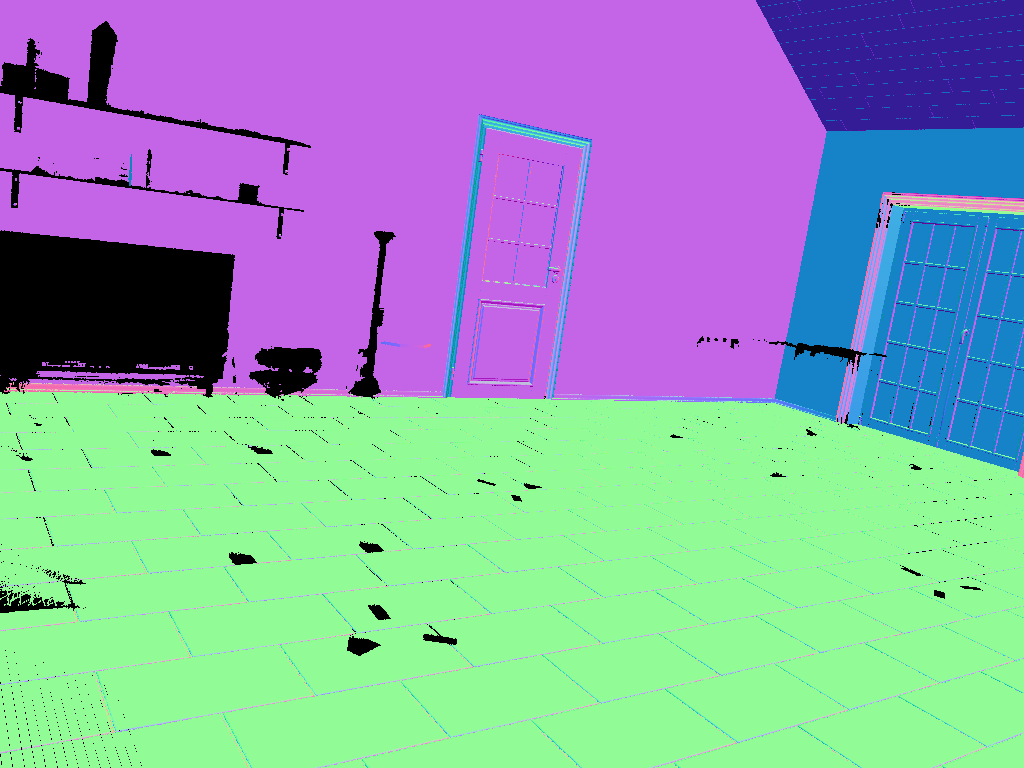

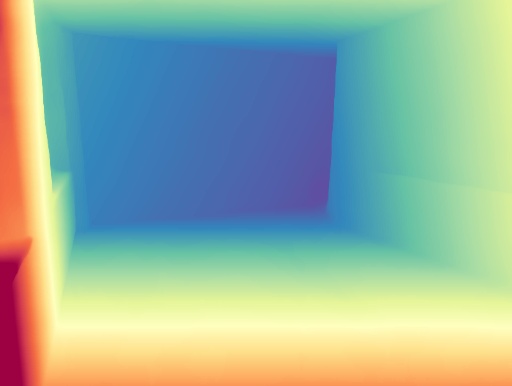

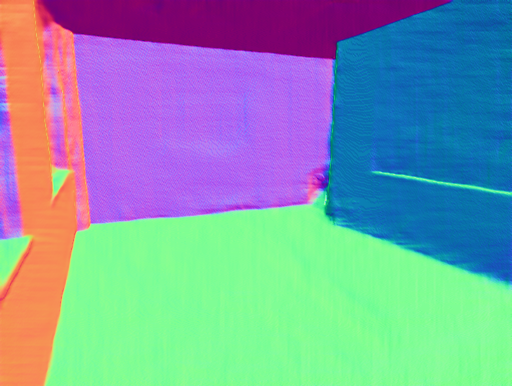

We introduce Room Envelopes, a synthetic dataset that provides dual pointmap representations for indoor scene reconstruction. Each sample consists of three pixel-aligned components: an RGB image, a visible surface (depth and normals), and a layout surface (depth and normals); examples are shown below. The visible surface captures all directly visible geometry, including furniture and objects, while the layout surface shows the structural elements as they would appear without occlusion. This dual representation enables direct supervision for layout reconstruction in occluded regions.
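To make the dual representation concrete, the following is a minimal sketch of how a depth image relates to a pointmap and how the two surfaces identify occluded layout regions. It assumes a pinhole camera and z-depth (distance along the optical axis); the dataset's actual storage format is not described here, and the function names, intrinsics, and tolerance are all hypothetical.

```python
import numpy as np

def depth_to_pointmap(depth, fx, fy, cx, cy):
    """Back-project a z-depth image (H, W) into a camera-space pointmap (H, W, 3).

    Assumes a pinhole camera; fx, fy, cx, cy are illustrative intrinsics.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # u: column index, v: row index
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    return np.stack([x, y, depth], axis=-1)

def occlusion_mask(visible_depth, layout_depth, tol=1e-3):
    """True where the layout surface lies behind the visible surface."""
    return layout_depth > visible_depth + tol

# Toy 2x3 scene: a wall at 4 m, with a box at 2 m covering the middle column.
layout_depth = np.full((2, 3), 4.0)
visible_depth = layout_depth.copy()
visible_depth[:, 1] = 2.0

visible_points = depth_to_pointmap(visible_depth, fx=1.0, fy=1.0, cx=1.0, cy=0.5)
layout_points = depth_to_pointmap(layout_depth, fx=1.0, fy=1.0, cx=1.0, cy=0.5)
mask = occlusion_mask(visible_depth, layout_depth)
```

In this toy scene the two pointmaps agree everywhere except the middle column, which is exactly where the layout surface carries information that the visible surface does not.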

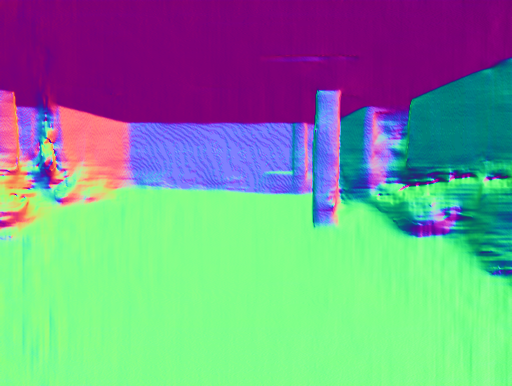

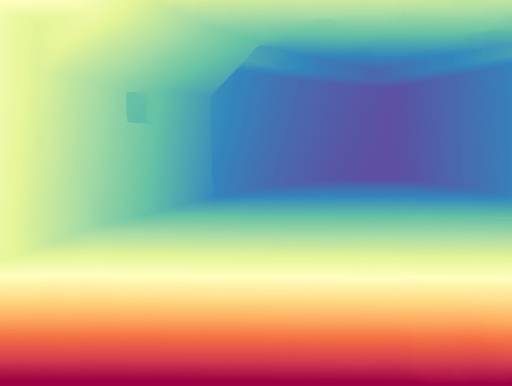

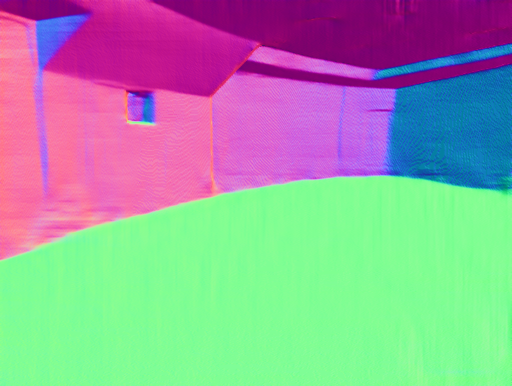

Dataset Examples

RGB Image

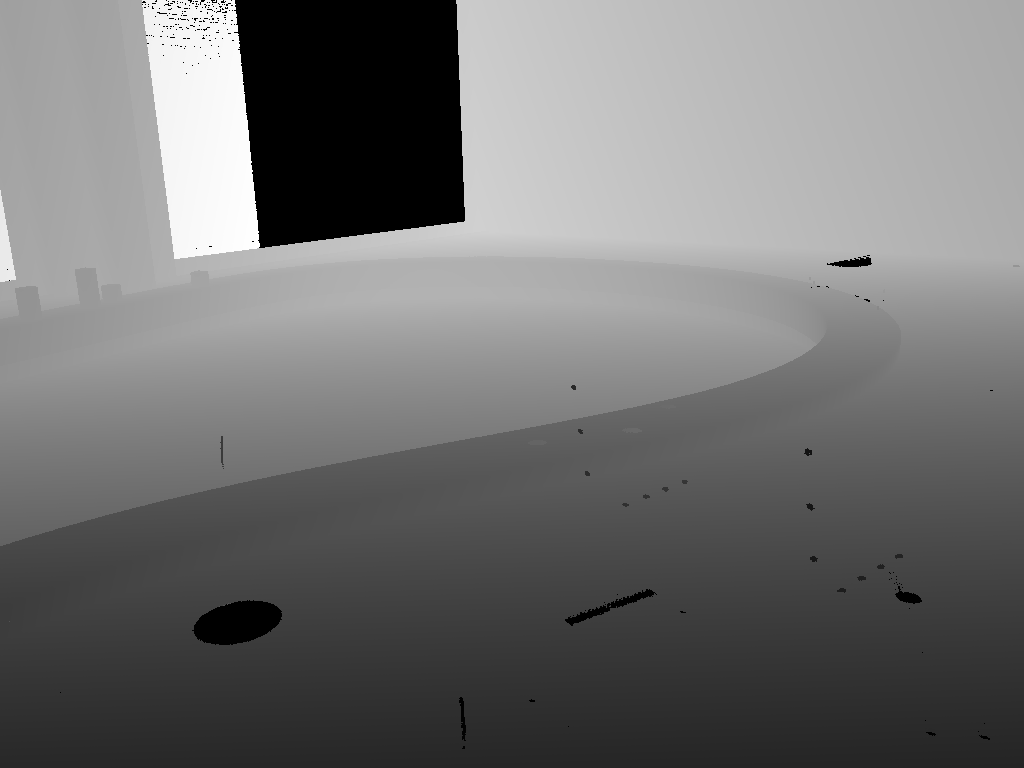

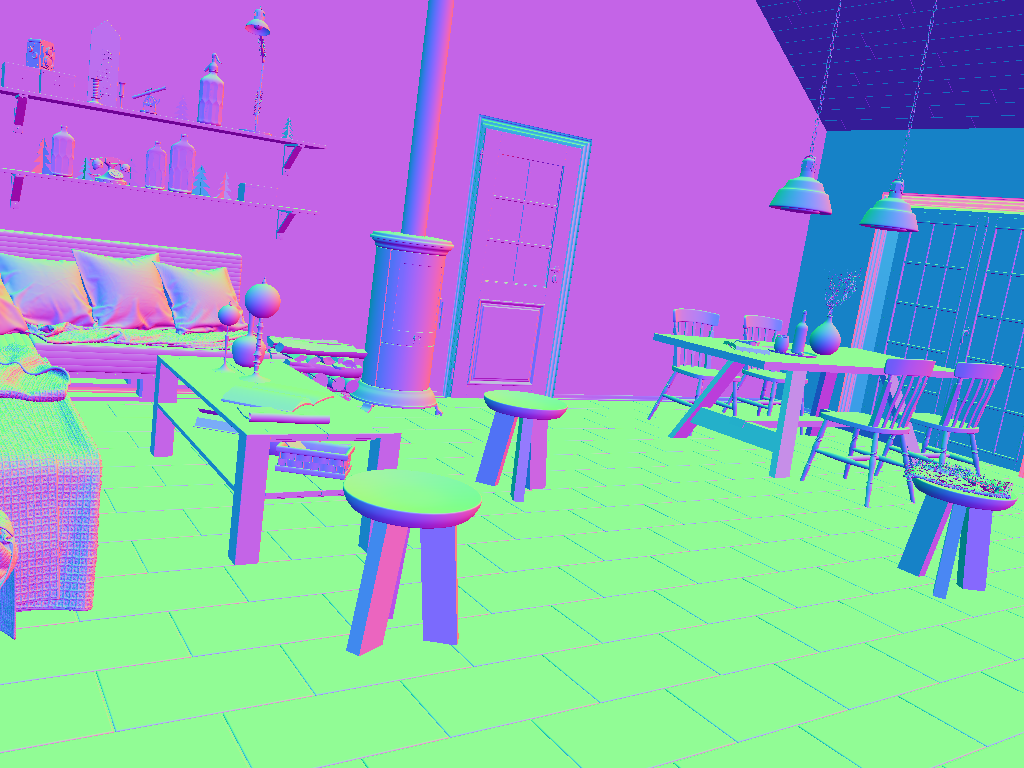

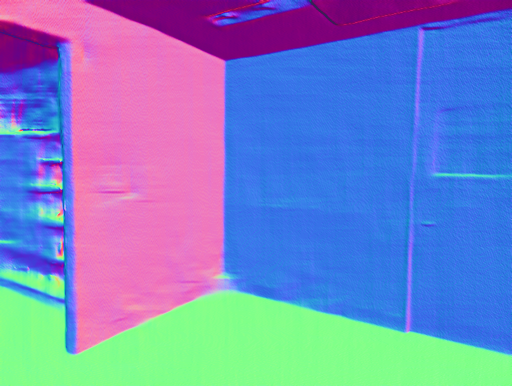

Visible Surface

Depth & Normals

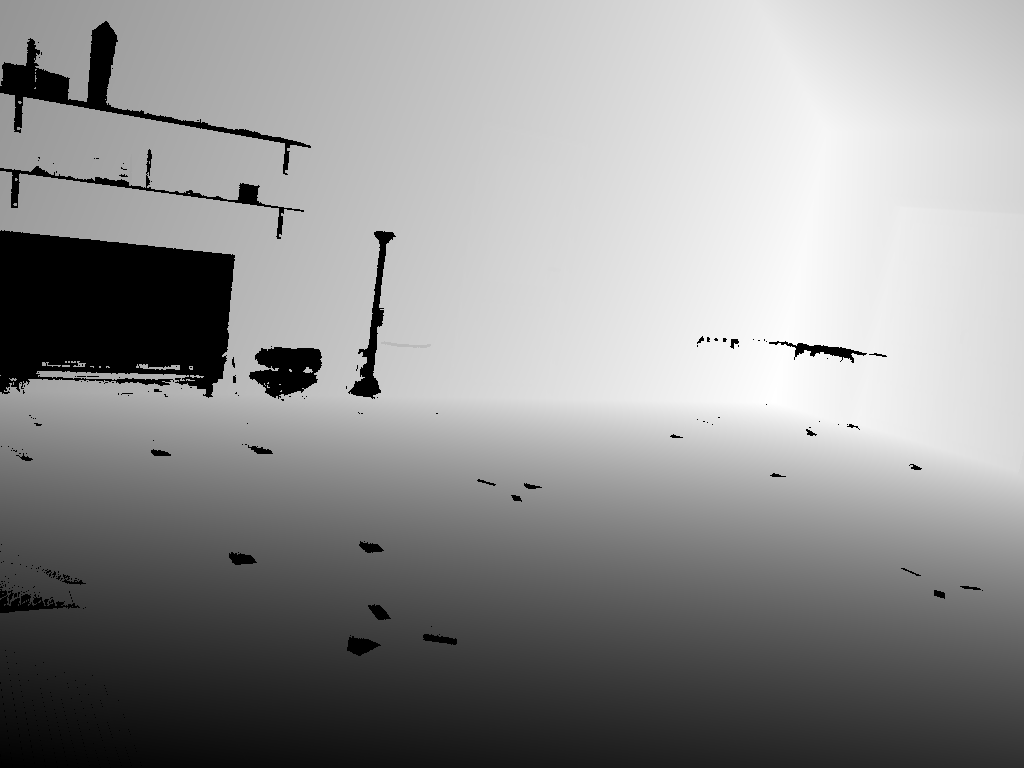

Layout Surface

Depth & Normals

RGB Image

Visible Surface

Depth & Normals

Layout Surface

Depth & Normals

Key Contributions

- Room Envelopes Dataset: An indoor synthetic dataset providing an image, a visible surface pointmap, and a layout surface pointmap for each camera pose

- Feed-forward Scene Reconstruction: A model demonstrating effective room layout estimation using this dataset

- Novel Representation: The only dataset providing both first visible depth and layout depth representations for comprehensive indoor scene understanding

Why Room Envelopes?

Current models for indoor scene reconstruction typically use depth images or layered depth representations for training, but these formats have inherent limitations:

- Depth maps only capture the first visible surface, losing information about underlying room structure

- Layered depth creates artificial discontinuities where continuous surfaces are fragmented across layers

- Feed-forward models struggle at occlusion edges, predicting a depth averaged between foreground and background rather than committing to a single surface

- Many existing layout estimation methods assume that all room surfaces (walls, floors, ceilings) are perfectly flat planes, which limits their applicability to more complex architectural geometries
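The averaging behaviour at occlusion edges can be illustrated with a small numerical sketch. It assumes a model trained with a squared-error regression loss, which is an assumption for illustration only; the losses used by specific models are not stated here. For a pixel whose target is ambiguous between a foreground depth of 2 m and a background depth of 4 m, the prediction that minimizes the expected squared error lies on neither surface.

```python
import numpy as np

# An ambiguous edge pixel: the target is the foreground (2 m) or the
# background (4 m) with equal probability. Values are illustrative.
targets = np.array([2.0, 2.0, 4.0, 4.0])

# Evaluate the mean squared error of each candidate constant prediction.
candidates = np.linspace(1.0, 5.0, 401)
l2_loss = ((candidates[:, None] - targets[None, :]) ** 2).mean(axis=1)

# The minimizer is the mean of the targets, midway between the two surfaces.
best = candidates[np.argmin(l2_loss)]
```

Here `best` is the mean of the two candidate depths, a point floating in free space between the object and the wall, which is the kind of indecisive geometry described above.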

Room Envelopes addresses these limitations by providing:

- Structural Clarity - Unoccluded views of room boundaries

- Direct Supervision - Pixel-aligned supervision for layout estimation

- Less Ambiguity - No uncertainty about layer assignment
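Because the layout surface is pixel-aligned with the visible surface, layout error can be measured per pixel and split into visible and occluded regions. The sketch below shows one way this could be done; the function name, the tolerance, and the choice of mean absolute error are assumptions for illustration, not the evaluation protocol of the paper.

```python
import numpy as np

def layout_depth_errors(pred_layout, gt_layout, gt_visible, tol=1e-3):
    """Mean absolute layout-depth error over all, visible, and occluded pixels.

    A pixel counts as occluded when the ground-truth layout surface lies
    behind the ground-truth visible surface by more than `tol`.
    """
    occluded = gt_layout > gt_visible + tol
    abs_err = np.abs(pred_layout - gt_layout)

    def masked_mean(mask):
        return float(abs_err[mask].mean()) if mask.any() else float("nan")

    return {
        "all": float(abs_err.mean()),
        "visible": masked_mean(~occluded),
        "occluded": masked_mean(occluded),
    }

# Toy 2x3 scene: a wall at 4 m, with a box at 2 m covering the middle column.
gt_layout = np.full((2, 3), 4.0)
gt_visible = gt_layout.copy()
gt_visible[:, 1] = 2.0

# A predictor that simply returns the visible surface is perfect on visible
# layout pixels and wrong by the full occluder offset on occluded ones.
errors = layout_depth_errors(gt_visible, gt_layout, gt_visible)
```

Splitting the error this way separates how well a model reproduces layout it can already see from how well it infers layout hidden behind objects.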

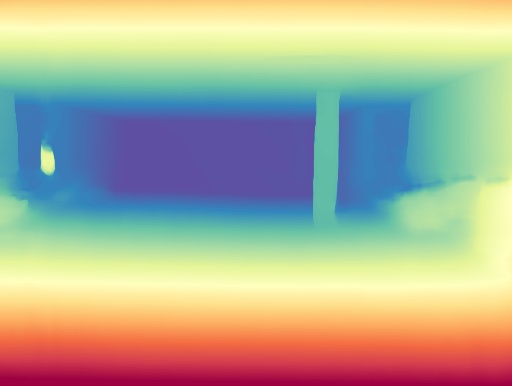

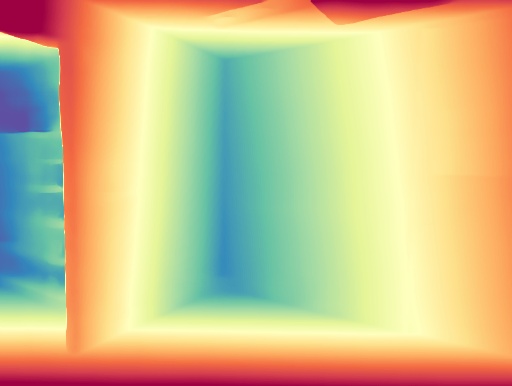

Layout Estimation on Real-World Examples

We trained a layout estimation model by fine-tuning a feed-forward depth estimator and ran it on real-world indoor scenes. Although the model was trained exclusively on our synthetic dataset, it shows promising generalization to real-world environments.
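When inspecting predictions like these, it can help to check that a layout depth map and a layout normal map are mutually consistent. The sketch below shows one common way to derive surface normals from a pointmap using central differences. It is not stated here whether the model predicts normals directly or derives them from depth, so this is only an illustrative check; the function name and the toy intrinsics are hypothetical.

```python
import numpy as np

def normals_from_pointmap(points, eps=1e-12):
    """Estimate camera-facing unit normals for the interior pixels of a pointmap.

    points: (H, W, 3) camera-space pointmap. Returns (H-2, W-2, 3).
    """
    du = points[:, 2:, :] - points[:, :-2, :]  # differences along image columns
    dv = points[2:, :, :] - points[:-2, :, :]  # differences along image rows
    n = np.cross(du[1:-1], dv[:, 1:-1])        # normal of the local tangent plane
    n = n / (np.linalg.norm(n, axis=-1, keepdims=True) + eps)

    # Flip normals that point away from the camera, which sits at the origin.
    center = points[1:-1, 1:-1]
    away = np.sum(n * center, axis=-1) > 0
    n[away] *= -1.0
    return n

# Toy example: a fronto-parallel wall at 4 m seen by a pinhole camera.
h, w = 4, 5
u, v = np.meshgrid(np.arange(w), np.arange(h))
depth = np.full((h, w), 4.0)
points = np.stack([(u - 2.0) * depth, (v - 1.5) * depth, depth], axis=-1)
normals = normals_from_pointmap(points)
```

For the flat wall in this example every normal points straight back at the camera along the negative z-axis, as expected.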

Panels: Real-World RGB Image, Predicted Layout Depth, Predicted Layout Normals.

BibTeX

    @inproceedings{bahrami2025roomenvelopes,
      title={Room Envelopes: A Synthetic Dataset for Indoor Layout Reconstruction from Images},
      author={Bahrami, Sam and Campbell, Dylan},
      booktitle={Australasian Joint Conference on Artificial Intelligence},
      pages={229--241},
      year={2025},
    }